When we interact with Chat GPT, Claude, or Gemini, it’s easy to forget that these systems are built on mountains of carefully processed text. Behind every fluent answer lies a multi-stage pre-training pipeline that transforms raw internet data into structured tokens ready for neural networks.

In this article, we’ll unpack how this process works — from massive web crawls like Common Crawl to tokenization strategies like byte pair encoding (BPE).

Stage 1: Pre-Training Data Collection

The first stage in building an LLM is data acquisition and preprocessing. Models don’t learn in the abstract — they need high-quality, diverse text.

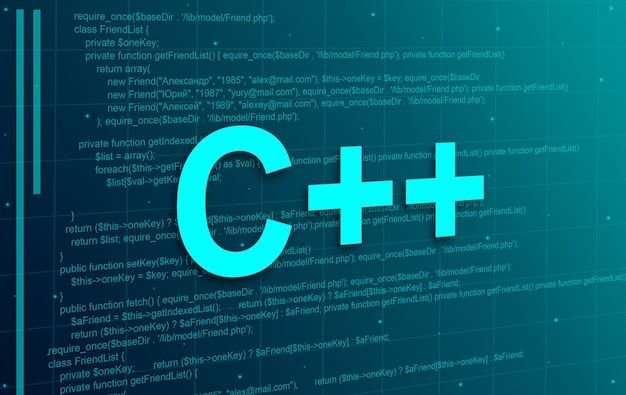

A leading example is Hugging Face’s Fine Web dataset, designed as a benchmark for open LLM training. Fine Web illustrates the kind of data pipelines all major players — OpenAI, Anthropic, Google — operate internally.

The goal:

Quantity : billions of tokens of text.

Quality : reliable, informative documents.

Diversity : news, literature, scientific text, conversations, and more.

Interestingly, after aggressive filtering, the dataset size is only around 44 TB. That might sound massive, but in today’s terms, it fits on a handful of hard drives — a reminder that the web is vast, but most of it is noise.

Stage 2: Raw Collection — Common Crawl

The backbone of most large-scale datasets is Common Crawl, a non-profit project that has been crawling and indexing the web since 2007.

As of 2025, it has indexed over 2.38 billion web pages.

Additionally, Common Crawl’s corpus overall spans “over 300 billion pages” across its history.

It works by starting from seed pages, then recursively following hyperlinks.

The raw data is stored as HTML snapshots.

This raw form is messy — full of ads, navigation bars, JavaScript, and markup. To make it usable, we need multiple filtering steps.

Stage 3: Filtering & Cleaning

Turning raw HTML into usable training data requires extensive preprocessing. Here are the key stages:

URL Filtering : Remove entire categories of sites: malware, spam, SEO farms, adult content, hate speech, and more.

Text Extraction : Strip away HTML tags, CSS, and navigation junk. What remains is the human-readable text.

Language Filtering : Use classifiers to keep only desired languages. For example, Fine Web keeps pages with at least 65% English text.

Deduplication : Remove near-identical pages (e.g., mirrored Wikipedia dumps).

PII Removal : Detect and filter personally identifiable information like addresses or SSNs (Social Security Numbers).

The result: clean, diverse, high-quality text that better reflects the patterns of natural language.

Stage 4: Representation as Sequences

Neural networks don’t read words the way humans do. They expect input as sequences of discrete symbols.

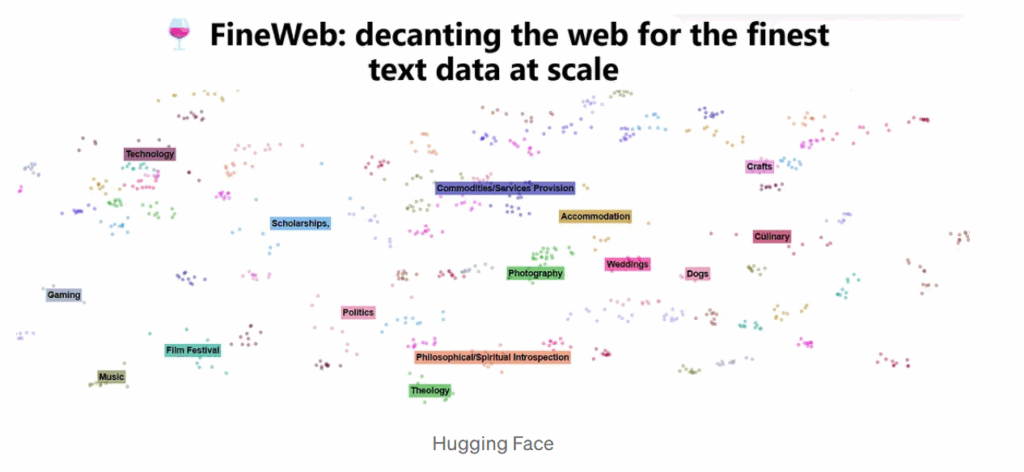

From Raw Text to Bits

At the lowest level, text is stored as binary (0s and 1s). For example, UTF-8 encoding translates every character into 8-bit chunks.

From Bits to Bytes

Instead of working with just 0s and 1s, we can group them into bytes (8 bits), giving 256 (2⁸) possible symbols. This shortens the sequence length by 8x.

But bytes are still inefficient. We need a smarter approach.

Stage 5: Tokenization with Byte Pair Encoding (BPE)

Modern LLMs rely on Byte Pair Encoding (BPE) to compress sequences further:

Start with all text split into individual bytes.

Find the most frequent consecutive pairs (e.g. 116 32 for “t_ (t and space)”).

Merge them into a new symbol with a unique ID.

Repeat until reaching the target vocabulary size.

For example:

“hello world” : tokenized differently depending on casing and spacing.

Sometimes it’s two tokens: [hello] [world].

Sometimes three: [hel] [lo] [world].

This flexibility helps models handle rare words and sub-words effectively.

Vocabulary Size

State-of-the-art models typically settle around 100,000 tokens.

GPT-4, for instance, uses 100,277 tokens.

This balance ensures that:

Sequences aren’t too long (reducing compute cost).

The vocabulary is large enough to capture nuances in text.

Stage 6: From Tokens to Neural Nets

Once tokenized, text becomes a one-dimensional sequence of IDs. These IDs are the actual input to the transformer network.

Example:

“Hello world” : [15339, 1917]

The model learns embeddings for each token and builds contextual meaning across the sequence.

At this stage, pre-training can begin — billions of sequences fed into the network, gradually shaping its ability to model human language.

Key Takeaways

Pre-training pipelines rely on web-scale data, but only after heavy filtering and cleaning.

Common Crawl is the backbone of most open datasets.

Tokenization is crucial: without efficient compression, sequence lengths would be unmanageable.

Modern models like GPT-4 use ~100k token vocabularies to strike a balance between expressiveness and efficiency.

Final Thoughts

The journey from raw internet data to tokens is a story of engineering discipline. Each stage — crawling, filtering, deduplication, tokenization — is designed to extract maximum signal while minimizing noise.

The next time you type a prompt into an LLM, remember: before the network could generate a single word, an enormous pipeline of systems had to transform messy web pages into structured, compressed tokens — the true raw material of intelligence.