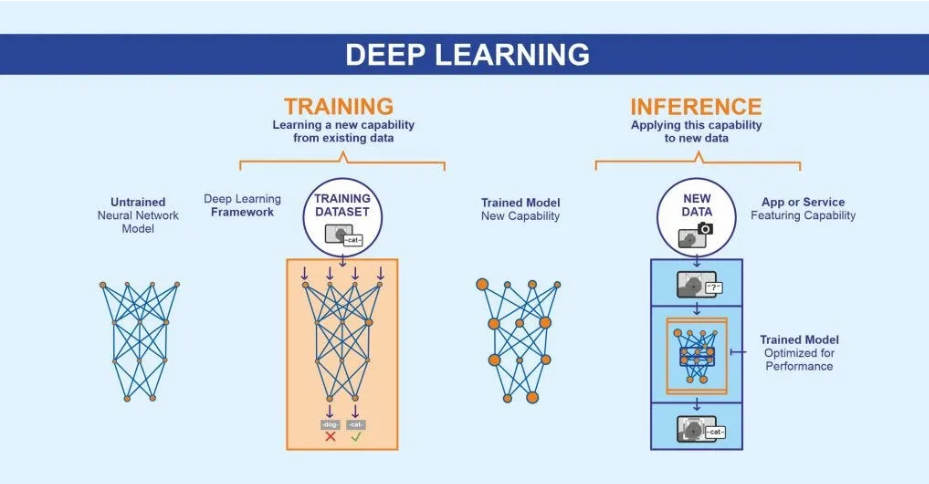

In Part 2, we unpacked how large language models (LLMs) learn during training — billions of tokens fed into neural networks, shaping parameters that capture patterns of human language. But once training is complete, what happens next?

That’s where inference comes in — the stage where an LLM finally puts its knowledge to work, generating new text in response to your prompt. In this article, we’ll dive into how inference works, why it’s inherently probabilistic, and why even small design choices here can dramatically change user experience.

What Is Inference?

Inference is the process of using a trained model to generate text. Unlike training, where weights are constantly updated, inference works with fixed parameters — the “frozen” knowledge the model learned during training.

When you type into ChatGPT or Claude, you’re not helping the model learn more. You’re simply triggering inference: feeding tokens in, and letting the model predict what comes next.

Step 1: Starting With a Prompt

Inference begins with a prefix of tokens — your input prompt.

Example:

Prompt: “Once upon a time”

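Tokens (illustrative IDs; the real IDs, and how many tokens the phrase splits into, depend on the tokenizer): [91, 860, 287]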

The model then asks: how likely is each possible next token, given this sequence?
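Written as a formula, that question is a conditional probability. In the standard notation used below (not from the original article), x_1, …, x_t are the tokens so far, V is the vocabulary, and θ stands for the model's frozen parameters. The model has to produce one probability for every vocabulary entry, and those probabilities must sum to 1:

```latex
p_\theta(x_{t+1} = v \mid x_1, \dots, x_t) \quad \text{for every } v \in V,
\qquad \text{with} \quad \sum_{v \in V} p_\theta(x_{t+1} = v \mid x_1, \dots, x_t) = 1
```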

Step 2: Probability Distributions

At each step, the model outputs a probability distribution over its entire vocabulary.

Example:

Next-token probabilities:

[“prince”=0.31, “king”=0.22, “dragon”=0.07, “cat”=0.01, …]
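Under the hood, the network first produces a raw score (a logit) for every token, and a softmax turns those scores into probabilities. Below is a minimal sketch using a made-up four-token vocabulary; the logit values are hypothetical and do not come from any real model:

```python
import math

def softmax(logits: dict[str, float]) -> dict[str, float]:
    """Convert raw scores (logits) into probabilities that sum to 1."""
    # Subtracting the largest logit avoids overflow in exp(); it does not change the result.
    m = max(logits.values())
    exps = {tok: math.exp(score - m) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

# Hypothetical logits for a tiny vocabulary (made-up numbers for illustration).
logits = {"prince": 2.0, "king": 1.6, "dragon": 0.5, "cat": -1.2}
probs = softmax(logits)
for tok, p in probs.items():
    print(f"{tok}: {p:.2f}")
```

Higher logits always map to higher probabilities, but every token keeps a nonzero share, which is what makes sampling possible at all.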

Rather than always picking the top token (“prince”), a typical chat setup samples from this distribution. This is where inference gets interesting: it’s like rolling a weighted die.

High-probability tokens are more likely to be chosen.

But there’s always some chance a lower-probability token appears.

This stochastic nature is why responses feel creative and varied, not rigid.
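Sampling from a distribution like the one above takes only a few lines. This sketch uses Python's standard `random.choices`, which treats the weights as relative, so the four listed probabilities are effectively renormalized (the "…" tail from the example is simply left out). The function name `sample_next_token` is hypothetical:

```python
import random

# The four probabilities from the example above; the elided tail is omitted,
# so random.choices renormalizes over just these four tokens.
next_token_probs = {"prince": 0.31, "king": 0.22, "dragon": 0.07, "cat": 0.01}

def sample_next_token(probs: dict[str, float], rng: random.Random) -> str:
    """Draw one token at random, weighted by its probability."""
    tokens = list(probs)
    weights = list(probs.values())
    return rng.choices(tokens, weights=weights, k=1)[0]

rng = random.Random(0)  # fixed seed so the run is reproducible
draws = [sample_next_token(next_token_probs, rng) for _ in range(10_000)]
for tok in next_token_probs:
    print(tok, draws.count(tok) / len(draws))
```

Over many draws, "prince" wins about half the time, while "cat" still shows up occasionally: the weighted-die behavior described above.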

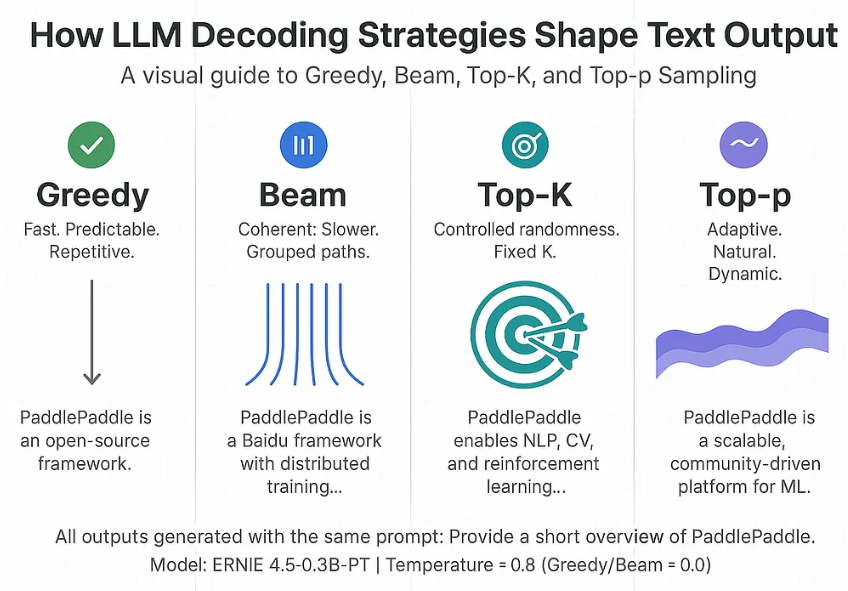

Step 3: Sampling Strategies

Different decoding strategies shape how outputs feel:

Greedy decoding → Always pick the highest-probability token. (Deterministic, but often repetitive.)

Top-k sampling → Sample only from the top k tokens. (Balances diversity and coherence.)

Nucleus sampling (top-p) → Sample from the smallest set of tokens whose combined probability ≥ p. (The candidate set grows or shrinks with the model’s confidence; widely used in practice.)

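Temperature scaling → Divides the model’s raw scores (logits) by a temperature T before turning them into probabilities. Low T (below 1) sharpens the distribution toward the top tokens (more focused); high T (above 1) flattens it (more varied, eventually incoherent).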
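The four strategies above can be sketched on a toy vocabulary. This is a simplified, dictionary-based illustration under stated assumptions: the logits are made up, and the helper names (`greedy`, `top_k_filter`, `top_p_filter`, `sample`) are hypothetical. Real inference libraries apply the same ideas to large tensors of logits:

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax: divide logits by T (T > 0), then normalize."""
    scaled = {t: s / temperature for t, s in logits.items()}
    m = max(scaled.values())
    exps = {t: math.exp(s - m) for t, s in scaled.items()}
    z = sum(exps.values())
    return {t: e / z for t, e in exps.items()}

def greedy(probs):
    """Greedy decoding: always return the single most likely token."""
    return max(probs, key=probs.get)

def top_k_filter(probs, k):
    """Top-k: keep only the k most likely tokens, then renormalize."""
    top = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:k]
    z = sum(p for _, p in top)
    return {t: p / z for t, p in top}

def top_p_filter(probs, p):
    """Top-p: keep the smallest set of most likely tokens whose total probability >= p."""
    kept, cumulative = {}, 0.0
    for t, prob in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept[t] = prob
        cumulative += prob
        if cumulative >= p:
            break
    z = sum(kept.values())
    return {t: q / z for t, q in kept.items()}

def sample(probs, rng):
    """Draw one token from a (possibly filtered) distribution."""
    tokens, weights = zip(*probs.items())
    return rng.choices(tokens, weights=weights, k=1)[0]

# Hypothetical logits for a tiny vocabulary (made-up values).
logits = {"prince": 2.0, "king": 1.6, "dragon": 0.5, "cat": -1.2}
rng = random.Random(42)

print("greedy:", greedy(softmax(logits)))
print("top-k (k=2):", sample(top_k_filter(softmax(logits), k=2), rng))
print("top-p (p=0.9):", sample(top_p_filter(softmax(logits), p=0.9), rng))
print("T=0.5:", softmax(logits, temperature=0.5))
print("T=1.5:", softmax(logits, temperature=1.5))
```

With these numbers, top-k with k=2 can only ever return "prince" or "king", top-p with p=0.9 also admits "dragon" (the first three tokens together cross 0.9), and lowering T from 1.5 to 0.5 pushes more probability onto "prince".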

Together, these settings largely determine whether output reads as focused and predictable, varied and imaginative, or chaotic.

Step 4: Iterative Generation

Once a token is chosen, it’s appended to the sequence, and the model repeats the process.

Example sequence:

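Prompt → [91, 860, 287]

Step 1 → [91, 860, 287, 13659] (the newly sampled token, shown with an illustrative ID, is appended)

Step 2 → the model reads all four tokens and samples a fifth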

… and so on.
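The loop can be sketched end to end with a stand-in for the model. Here a hand-written bigram table (entirely hypothetical) plays the role of the network, and words replace token IDs for readability. A real LLM conditions on the whole sequence rather than just the last token, but the outer loop of predict, sample, append, and repeat has the same shape:

```python
import random

# Hypothetical stand-in "model": maps the last token to next-token probabilities.
# Purely illustrative; a real LLM scores its whole vocabulary using the full context.
TOY_MODEL = {
    "time": {"there": 0.7, "a": 0.3},
    "there": {"lived": 1.0},
    "lived": {"a": 1.0},
    "a": {"dragon": 0.5, "prince": 0.5},
    "dragon": {"<eos>": 1.0},
    "prince": {"<eos>": 1.0},
}

def next_token_distribution(tokens):
    """Hypothetical model call: probabilities for the next token."""
    return TOY_MODEL.get(tokens[-1], {"<eos>": 1.0})

def generate(prompt, max_new_tokens=20, seed=0):
    rng = random.Random(seed)
    tokens = list(prompt)
    # TOY_MODEL is never modified here (like frozen weights); only the sequence grows.
    for _ in range(max_new_tokens):
        probs = next_token_distribution(tokens)
        choices, weights = zip(*probs.items())
        token = rng.choices(choices, weights=weights, k=1)[0]
        if token == "<eos>":  # an end-of-sequence token stops generation
            break
        tokens.append(token)  # append, then feed the longer sequence back in
    return tokens

print(" ".join(generate(["Once", "upon", "a", "time"])))
```

Different seeds can take different paths through the table, which is the same run-to-run variation you see when regenerating a chatbot answer.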

By repeating this loop until it emits an end-of-sequence token or hits a length limit, the model builds up a full passage, not by copying the training data but by recombining learned statistical patterns into new sequences.

Stochastic Creativity vs. Memorization

An important point: inference is not a database lookup. The model doesn’t typically regurgitate whole documents; instead, it assembles outputs from patterns learned from its training data.

Sometimes it reproduces phrases seen in training, and occasionally longer passages that appeared many times in its training data.

More often, it generates novel sequences that share statistical properties with its training distribution.

This is why LLMs can “hallucinate” — producing plausible-sounding but factually incorrect text. They’re predicting patterns, not consulting a factual database.

A Historical Example: GPT-2

A great case study is GPT-2, released by OpenAI in 2019.

It was one of the first models to show what the modern recipe of a transformer, large-scale pre-training on web text, and sampling-based generation could produce.

At 1.5B parameters, it was considered huge at the time, yet it is tiny next to GPT-3 (175B parameters) and later frontier models.

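Its ability to generate coherent text surprised much of the AI world. OpenAI initially withheld the full model and released it in stages over 2019, sparking debate about whether powerful models should be released publicly.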

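Today’s models such as GPT-4 and Claude 3.5 build on the same foundation: a transformer trained to predict the next token. That core recipe hasn’t fundamentally changed; what has changed is scale, a series of architectural refinements, and post-training methods such as instruction tuning and reinforcement learning from human feedback (RLHF).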

Training vs. Inference: A Quick Recap

Training → Adjusts billions of weights by predicting the next token across massive datasets.

Inference → Uses those fixed weights to generate new sequences in real time.

Key difference → Training is compute-heavy and done once up front (plus occasional fine-tuning); inference is far cheaper per request but runs every time you chat with a model, so its total cost adds up at scale.
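To put rough numbers on the gap, a widely used rule of thumb (an approximation, not an exact count) says a forward pass costs about 2N FLOPs per token for a model with N parameters, while training costs about 6N FLOPs per training token (forward plus backward pass). Plugging in GPT-2’s 1.5B parameters, together with a hypothetical training set of D = 10^10 tokens and a hypothetical 500-token reply, both chosen purely for illustration:

```latex
\begin{aligned}
C_{\text{train}} &\approx 6ND = 6 \times (1.5 \times 10^{9}) \times 10^{10} = 9 \times 10^{19}\ \text{FLOPs} \\
C_{\text{reply}} &\approx 2NT = 2 \times (1.5 \times 10^{9}) \times 500 = 1.5 \times 10^{12}\ \text{FLOPs} \\
C_{\text{train}} / C_{\text{reply}} &\approx 6 \times 10^{7}
\end{aligned}
```

Under these assumptions, one training run costs roughly as much compute as tens of millions of such replies, which is why training happens once while inference runs on every request.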

Key Takeaways

Inference is the generation phase of LLMs, powered by next-token prediction.

Outputs are probabilistic, shaped by sampling strategies like top-k and temperature.

What you read in ChatGPT is mostly not memorized training text, but new text generated from learned statistical patterns.

GPT-2 was an early, recognizably modern LLM, paving the way for today’s giants.

Closing Thoughts

When you type a message into ChatGPT or Gemini, you’re not tapping into a live learning system. You’re triggering inference — billions of frozen parameters playing out the probabilities of language.