In previous parts of The LLM Journey, we covered:

Part 1: How raw internet text becomes tokens.

Part 2: How neural networks learn to predict the next token.

Part 3: How inference turns frozen weights into generated text.

Part 4: How GPT-2 is trained and why GPUs became the AI gold rush.

Now, we will explore base models, their releases, and how they form the foundation for LLM assistants.

The Computational Workflow

Training modern LLMs is extremely compute-intensive:

The more GPUs you deploy, the more token sequences you can predict in parallel.

Faster token processing → quicker iteration → ability to train larger networks

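Large clusters are necessary because a single GPU would take impractically long to process the training data. Adding GPUs lets more sequences be processed in parallel, although communication overhead between devices usually keeps the speedup below perfectly linear.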

This explains the massive infrastructure in AI labs. Elon Musk’s reported acquisition of 100,000 GPUs highlights the scale: each GPU is costly and power-hungry, and in a training cluster all of them work on the same job, predicting the next token in sequences, which is the fundamental LLM task.
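To make the parallelism argument concrete, here is a back-of-the-envelope sketch of data-parallel throughput. The function names and every number in the usage lines are hypothetical, and the model is idealized: it assumes each GPU processes its own share of sequences with no communication overhead, which real clusters do not achieve.

```python
def tokens_per_step(num_gpus: int, sequences_per_gpu: int, sequence_length: int) -> int:
    """Tokens processed in one idealized data-parallel training step."""
    return num_gpus * sequences_per_gpu * sequence_length


def steps_needed(total_tokens: int, num_gpus: int,
                 sequences_per_gpu: int, sequence_length: int) -> int:
    """Number of steps to see total_tokens once (ceiling division)."""
    per_step = tokens_per_step(num_gpus, sequences_per_gpu, sequence_length)
    return -(-total_tokens // per_step)


if __name__ == "__main__":
    # Hypothetical settings, chosen only for illustration.
    for gpus in (1, 8, 64):
        print(gpus, "GPUs:", steps_needed(1_000_000_000, gpus, 8, 1024), "steps")
```

Under these assumptions, the step count shrinks in proportion to the number of GPUs. In practice the gain is smaller, because devices must synchronize gradients after each step.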

Base Models: Token-Level Simulators

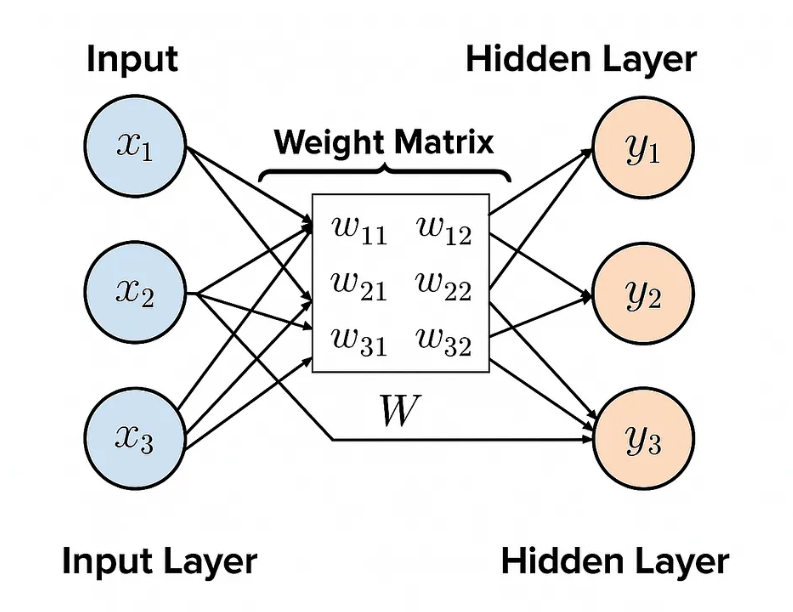

One way to conceptualize a base model is as a lossy compression of the internet. The hundreds of billions of parameters serve as a probabilistic memory of online text. High-frequency facts and concepts are more reliably encoded than rare or niche knowledge. As such, prompting the base model can elicit useful information, though it is statistical, not deterministic.

A base model is essentially a token-level internet document simulator:

Predicts the next token in a sequence based on training data.

Stores statistical patterns of web documents in billions of parameters.

Not yet an assistant; it cannot reliably answer questions or follow instructions.
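The "document simulator" idea can be illustrated with a deliberately tiny stand-in: a bigram model that counts which token follows which. This is not how a neural base model works internally (it learns parameters rather than storing counts), and the corpus and function names below are made up for illustration. It only shows what "storing statistical patterns and predicting the next token" means.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count, for every token, how often each token follows it."""
    counts = defaultdict(Counter)
    for document in corpus:
        tokens = document.split()  # whitespace split stands in for a real tokenizer
        for previous, following in zip(tokens, tokens[1:]):
            counts[previous][following] += 1
    return counts


def next_token_distribution(counts, previous):
    """Turn raw follow-up counts into probabilities for the next token."""
    followers = counts.get(previous, Counter())
    total = sum(followers.values())
    return {token: count / total for token, count in followers.items()}


if __name__ == "__main__":
    toy_corpus = ["the cat sat", "the cat ran", "the dog sat"]  # hypothetical data
    model = train_bigram(toy_corpus)
    print(next_token_distribution(model, "the"))
```

Tokens seen more often after a given context get a higher probability, which mirrors the point that high-frequency facts are encoded more reliably than rare ones.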

Understanding Base Model Behavior

Stochastic Token Generation

Base models are probabilistic systems: the same prompt can produce different outputs, because each token is sampled from a probability distribution rather than chosen deterministically.
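A minimal sketch of what sampling looks like, assuming the model has already produced one score (logit) per candidate token. The vocabulary and logits here are invented, and the `temperature` parameter is a common addition that is not discussed in this article: lower values concentrate probability on the top token, higher values flatten the distribution.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Convert raw scores into probabilities that sum to 1."""
    scaled = [value / temperature for value in logits]
    largest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(value - largest) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]


def sample_token(vocab, logits, temperature=1.0, rng=None):
    """Draw one token at random, weighted by its probability."""
    rng = rng or random
    return rng.choices(vocab, weights=softmax(logits, temperature), k=1)[0]


def greedy_token(vocab, logits):
    """Deterministic alternative: always pick the highest-scoring token."""
    best_index = max(range(len(logits)), key=logits.__getitem__)
    return vocab[best_index]


if __name__ == "__main__":
    vocab = ["blue", "clear", "falling"]  # hypothetical candidates
    logits = [2.0, 1.0, 0.5]              # hypothetical model scores
    print([sample_token(vocab, logits) for _ in range(5)])
    print(greedy_token(vocab, logits))
```

Repeated calls to `sample_token` can return different tokens for identical inputs, while `greedy_token` always returns the same one.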

Regurgitation / Memorization

High-quality, frequently seen data (like Wikipedia) can be memorized, leading to near-exact reproductions. This is called regurgitation:

Observed when pasting the first sentence of a Wikipedia page into a base model → it can reproduce the text almost verbatim.

Occurs because such documents are oversampled or repeated during training.

While impressive, this is usually undesirable in final assistants, as models should synthesize rather than copy.
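The link between repetition and regurgitation can be shown with a toy n-gram model, used here purely as a stand-in for a real base model. All documents and names are hypothetical. One document is repeated many times in the training corpus, so greedy continuation from its opening words follows it to the end.

```python
from collections import Counter, defaultdict


def train_ngram(corpus, context_size=2):
    """Count which token follows each context of `context_size` tokens."""
    counts = defaultdict(Counter)
    for document in corpus:
        tokens = document.split()
        for i in range(len(tokens) - context_size):
            context = tuple(tokens[i:i + context_size])
            counts[context][tokens[i + context_size]] += 1
    return counts


def greedy_continue(counts, prompt, max_new_tokens=20, context_size=2):
    """Extend the prompt by repeatedly taking the most frequent next token."""
    tokens = prompt.split()
    for _ in range(max_new_tokens):
        context = tuple(tokens[-context_size:])
        if context not in counts:
            break  # nothing was ever seen after this context
        tokens.append(counts[context].most_common(1)[0][0])
    return " ".join(tokens)


if __name__ == "__main__":
    repeated = "a zebra is an african equine with black and white stripes"
    corpus = [repeated] * 10 + [
        "a zebra is a popular zoo animal",
        "an african equine lives in herds",
    ]
    model = train_ngram(corpus)
    print(greedy_continue(model, "a zebra"))
```

Because the repeated document dominates the counts at every step, the continuation reproduces it verbatim even though competing documents share some of its contexts.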

Probabilistic Knowledge of the World

When prompted about events after its training cutoff (e.g., events in 2024), the base model will hallucinate:

It predicts the next token based on statistical patterns, producing “parallel universe” scenarios.

Each sampling can generate a different plausible continuation.

Practical Use: Few-Shot & In-Context Learning

Base models can already perform practical tasks without fine-tuning using in-context learning:

Provide examples in a prompt (e.g., English → Korean translations).

The model infers the underlying pattern and continues it.

This is called few-shot prompting.

Example:

English: Apple → Korean: 사과

English: Dog → Korean: 개

English: Teacher → Korean: ?

The model can correctly output “선생님” by recognizing the input-output pattern.

This demonstrates that even a base model can generalize patterns in data through careful prompt engineering.
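The prompt above can be assembled programmatically. The sketch below only builds the text; sending it to a model is left out, since that depends on whichever base model and API you use. The function name and label parameters are hypothetical. The key design choice is that the final line stops right after the target label, so the most natural continuation is the missing translation.

```python
def build_few_shot_prompt(examples, query,
                          source_label="English", target_label="Korean"):
    """Format solved examples, then leave the last answer open for the model."""
    lines = [
        f"{source_label}: {source} → {target_label}: {target}"
        for source, target in examples
    ]
    # End on the target label so the model completes the pattern.
    lines.append(f"{source_label}: {query} → {target_label}:")
    return "\n".join(lines)


if __name__ == "__main__":
    examples = [("Apple", "사과"), ("Dog", "개")]
    print(build_few_shot_prompt(examples, "Teacher"))
```

Adding more example pairs generally makes the pattern less ambiguous, at the cost of a longer prompt.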

Turning Base Models into Assistants

You can instantiate a language model assistant using only a base model:

Structure the prompt as a conversation between a human and AI assistant.

Include sample turns to guide style and behavior.

Append the user’s actual query.

Example:

Human: Why is the sky blue?

Assistant: The sky appears blue due to Rayleigh scattering, which preferentially scatters shorter wavelengths of light…

The base model continues the conversation, effectively adopting the role of an assistant.

This works because the prompt conditions token generation to mimic conversational patterns.

This approach leverages structured prompts to transform statistical token simulators into practical AI tools, even without fine-tuning.
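The three steps above (conversation structure, sample turns, actual query) can be sketched as a small prompt builder. The function names are hypothetical and no model call is included. One practical detail is an assumption on my part rather than something stated in this article: a base model does not know when the assistant's turn ends, so it may keep writing the next "Human:" line itself, and the reply is cut at that marker.

```python
def build_assistant_prompt(sample_turns, user_query):
    """Lay out sample turns as a transcript and end on an open Assistant line."""
    lines = []
    for human_text, assistant_text in sample_turns:
        lines.append(f"Human: {human_text}")
        lines.append(f"Assistant: {assistant_text}")
    lines.append(f"Human: {user_query}")
    lines.append("Assistant:")  # the base model continues from here
    return "\n".join(lines)


def extract_reply(completion, stop="\nHuman:"):
    """Keep only the assistant's turn, dropping any invented follow-up turns."""
    return completion.split(stop, 1)[0].strip()


if __name__ == "__main__":
    turns = [(
        "Why is the sky blue?",
        "The sky appears blue due to Rayleigh scattering.",
    )]
    print(build_assistant_prompt(turns, "Why are sunsets red?"))
    # Hypothetical raw completion text, written by hand for illustration.
    raw = " Sunlight travels through more air.\nHuman: Thanks!\nAssistant: Sure."
    print(extract_reply(raw))
```

Many text-completion APIs accept a stop sequence that serves the same purpose as `extract_reply`; check the documentation of the one you use.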

Key Takeaways

Base models = token-level simulators, not assistants.

Stochasticity leads to diverse outputs; frequent patterns are memorized.

Frequently repeated, high-quality data can be regurgitated; rare or post-cutoff facts are recalled unreliably and may be hallucinated.

Few-shot in-context learning allows pattern extraction and task generalization.

Clever prompt engineering can turn base models into functional assistants.

Closing Thoughts

Base models are the foundation of modern LLMs. They compress vast amounts of internet knowledge into parameters and serve as the starting point for assistant models. By understanding stochasticity, memorization, and in-context learning, we can build LLM applications and assistants on top of an existing base model without training a single parameter ourselves.